Have you ever wondered why, when confronted with illness symptoms that appear even a bit abnormal, we prefer to consult only a doctor at a large hospital, even though a more competent doctor may have a clinic right next door?

And have you ever wondered what that preference has to do with Artificial Intelligence (AI) and Machine Learning (ML)?

To explain that, let me recount what happened to me twenty-one years ago. I vividly remember that incident from 1997, and I can now relate it closely to the growing significance of AI and ML in healthcare today!

I was being examined by a leading physician at Agra (who had over twenty-five years of experience and a roaring practice) for a pain near my left toe. The conversation went as follows:

I: Doc Sahib, please have a look at my left toe. I have been troubled by severe pain for the last three weeks. I cannot even put my foot down or wear shoes.

Doctor: Did you ever have this type of pain before?

I: No.

Doctor: (Examining the pain area closely) Do you feel any irritation, or feel any urge to scratch that area?

I: No.

Doctor: Do you eat a lot of red meat?

I: No! I am a vegetarian.

Doctor: Do you like to eat a lot of tomatoes, cheese, spinach or any other high-protein foods?

I: Yes. I very frequently have cheese spread and baked beans for breakfast, and of course tomato in some form is there in almost every meal.

Doctor: (Prepares a slip for the diagnostic lab) Please get the uric acid blood test done, as I suspect you have gout.

I: Thanks, Doctor. I will come back later with the test results.

(Later during the day)

I: (Handing over the lab report) Here, Doc Sahib. Please have a look at the report.

Doctor: (Going through the test report) That is what I thought. You have gout! Your uric acid level is 12.4 mg/dl, whereas ideally it should be between 3.5 and 7.0 mg/dl. I will start the medication immediately.

(The doctor then spent a few minutes explaining what gout was and how it would impact my health and my lifestyle.)

I: Any restrictions on diet?

Doctor: Yes. For the time being, completely stop eating your favorites – cheese, tomato, spinach and all dals (lentils) except ‘moong’ dal.

The treatment started that same day; within three weeks the pain had substantially subsided and the gout was well under control.

What I have narrated above was actually Step 3 of the course of treatment I had been following for almost three weeks before I met that doctor at Agra.

- The symptoms – After more than five years in Bangalore, I had just moved to Noida and had started to adjust to a different living (and professional) environment. One day I woke up to acute pain in the area near my left toe. It appeared a little swollen and made it difficult for me even to wear shoes.

- Treatment Step 0 – As usually happens with all of us, I initially tried only home remedies, such as soaking the leg in warm water and taking painkillers. They were of no avail and the pain persisted.

- Treatment Step 1 – A few days later I attended a family gathering where a relative of mine, fresh out of college after completing her course in medicine, had a look at it, opined that it could be an allergic reaction due to the change of location (from Bangalore to Noida), and prescribed some tablets. However, the pain persisted and even increased after a few days of that treatment.

- Treatment Step 2 – It was then that I decided to consult a practicing physician, and went to a clinic just across the road from where we lived. He examined the pain area, diagnosed it as some sort of inflammation and advised applying a poultice for a few days. Even after several days of that treatment, the pain did not subside but actually got worse.

- Treatment Step 3 – Having had no relief for over three weeks, I finally decided to consult my younger brother, a leading plastic surgeon at Agra, who took me to one of his colleagues, a leading physician. What happened next I have already described above.

Now, twenty-one years later, when I analyze that course of treatment, I realize that:

- The young doctor who first advised me may never have seen such symptoms before, and thus was not able to diagnose them correctly.

- The physician I consulted next might have seen only a few patients with symptoms similar to mine (but not with the same illness), and therefore could not formulate the right questions that would have led to the correct diagnosis from among the possible outcomes of similar symptoms.

- The doctor at Agra, however, with his vast experience, had obviously seen that set of symptoms many times before and had acquired sufficient I and L to treat such cases effectively.

- Thus, though all three doctors were surely competent, what the first two obviously lacked was:

  - the ability to apply their I (Intelligence) in (a) arriving at the correct diagnosis based on the symptoms presented to them, and (b) subsequently determining an appropriate line of treatment; and

  - the depth of L (Learning) that comes from the experience of treating hundreds and thousands of patients with all kinds of symptoms, which brings the knowledge of what the possible diagnoses could be, which treatments worked and which did not, and why.

By applying AI and ML techniques and solutions in healthcare, it may now become possible to make the accumulated I and L – resulting from the large number of successful (and unsuccessful) treatments by various experienced doctors – available to competent but less experienced physicians.

With access to an appropriate AI/ML system, even a physician in a small clinic in a remote location could

- draw upon the accumulated experience of other successful doctors;

- be guided properly to arrive at the correct diagnosis and subsequently to determine an appropriate line of treatment; and

- confirm that the planned line of treatment is suitable for the patient’s medical profile. Where the patient’s medical profile is not readily available (as with emergency patients or admissions to trauma centres), AI/ML systems could caution the first medical responders about possible complications (if any) associated with a planned line of treatment.

Effective use of AI/ML systems in healthcare can deliver sustained benefits for all relevant stakeholders:

For the patient:

- Assurance that the physician would arrive at a correct diagnosis and propose an appropriate and effective line of treatment with little or almost no margin of error;

- Obviating the need to rush to a larger hospital/clinic just because the symptoms are a bit abnormal; and

- Faster and more effective response from medics in emergency cases.

For the healthcare provider:

- Increased efficiency with lower turnaround time for patients;

- Faster and more accurate diagnosis, and more effective treatment;

- Substantial reduction in unfair treatment cases; and

- Substantially faster and more accurate response by first medical responders in emergency cases.

In the face of the massive disruption taking place in healthcare and the frantic pace at which medical data is being generated, any AI/ML system is likely to soon become outdated, ineffective and irrelevant if it is not constantly updating its Intelligence and constantly Learning.

Thus it is imperative that all successes and failures arising from the use of an AI/ML system are fed back into that system to ensure constant refinement of its algorithms. That will enable it to provide even more accurate outcomes for future users.

Conclusion

From the above it is evident that an AI/ML system can be a powerful ally of a physician, and its deployment should by no means be framed as “man against machine”.

In my opinion, AI/ML technologies are still meant to assist the medical fraternity and are not really likely to replace doctors (at least in the foreseeable future)!

Conversation with a hospital CEO

During a recent conversation with the CEO-Doctor of a multi-specialty hospital, our discussion veered towards how data-driven decision-making using analytic insights could benefit the hospital. His response, typical of most CEOs (from any industry, for that matter), was: “Oh! I really don’t need any analytics! All the facts I need to run my organization are at my fingertips!”

My takeaway from that conversation was the two keywords ‘facts’ and ‘fingertips’! To run a successful organization, you do always need near real-time, relevant and critical facts (maybe up to ten, one for each fingertip!) on what is happening within the company. However, the facts (measures) alone may not be sufficient to arrive at a decision unless they are benchmarked against the desired performance and/or against the trends of those measures over different periods. Deploying analytics gives stakeholders that additional edge in decision-making by basing it more on validated data than on gut feeling.

That set me thinking about which top key performance indicators (KPIs), if available at one’s fingertips (at the click of a button), could help a CEO achieve organizational objectives more effectively, and which of them would be relevant for a hospital CEO!

I presume that any hospital CEO’s top priority is to earn the patients’ trust, and that is possible only if the hospital can meet and exceed patient expectations.

Meeting the patient expectations

What a patient expects from the hospital is treatment that is effective, timely and fair. The following KPIs keep the hospital CEO and other stakeholders informed of how well that is happening.

Treating the patients effectively …

The top hospital stakeholders should be worried if a high % of patients who have already been discharged (whether out-patients from day-care or inpatients from hospital-care) return to the hospital for re-treatment or re-admittance for the same ailment. That would show either that the initial diagnosis was flawed or that some critical elements were missed while administering the treatment. Either way, it would be a matter of great concern for the hospital CEO, who should always be aware of the Re-admittance Index – the % of discharged patients who required re-treatment or re-admittance.
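As a minimal sketch, assuming discharge records carry a patient ID, an ailment code and admission/discharge dates, and assuming (hypothetically) that a return for the same ailment within 30 days of a discharge counts as re-admittance, the Re-admittance Index could be computed as follows. The `Stay` record and `readmittance_index` function are illustrative names, not part of any hospital system.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Stay:
    """Hypothetical record: one treatment episode ending in a discharge."""
    patient_id: str
    ailment: str
    admitted_on: date
    discharged_on: date


def readmittance_index(stays: list[Stay], window_days: int = 30) -> float:
    """% of discharges followed by a return for the same ailment within
    `window_days` (the 30-day default is an assumed policy choice)."""
    if not stays:
        return 0.0
    # Group episodes by (patient, ailment)
    episodes: dict[tuple[str, str], list[Stay]] = {}
    for s in stays:
        episodes.setdefault((s.patient_id, s.ailment), []).append(s)
    returned = 0
    for group in episodes.values():
        group.sort(key=lambda e: e.admitted_on)
        # A discharge counts if the next episode for the same ailment
        # starts within the window after it
        for prev, nxt in zip(group, group[1:]):
            if (nxt.admitted_on - prev.discharged_on).days <= window_days:
                returned += 1
    return 100.0 * returned / len(stays)
```

The window length is the main design choice: a longer window catches more genuine treatment failures but also more unrelated recurrences.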

… and timely …

One of the most critical performance indicators within a day-care hospital is the TAT, the turnaround time – the elapsed time between entry of the patient into the hospital (registration) and the start of consultation by the physician. Other important TATs tracked within a hospital include the elapsed time between ordering a test and collecting its report, and, most importantly for an inpatient, the elapsed time between the decision to discharge and the actual vacating of the bed. Inordinate delays in these lead to irritated patients, increased costs and avoidable queueing issues. Hospitals typically set internal benchmarks, or compare against available industry benchmarks, to track the various TATs. In case of inordinate delays, hospitals can carry out a root cause analysis and take corrective and preventive actions.

What any hospital CEO should strive for is a TAT Index of less than 5% for any given period, which means that no more than 5% of patient-visits experience a delay beyond the set benchmark in treatment or in discharge.
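Since the TAT Index is defined above as the share of patient-visits delayed beyond a benchmark, a minimal sketch needs only a pair of timestamps per visit. The function names are hypothetical, and any benchmark value passed in (such as 30 minutes) is an assumption; only the 5% target comes from the text.

```python
from datetime import datetime, timedelta


def tat_index(visits: list[tuple[datetime, datetime]], benchmark: timedelta) -> float:
    """% of visits whose turnaround (start -> end timestamp) exceeds the benchmark.

    For the day-care TAT, start is registration and end is the start of consultation.
    """
    if not visits:
        return 0.0
    delayed = sum(1 for start, end in visits if end - start > benchmark)
    return 100.0 * delayed / len(visits)


def meets_target(index_pct: float, target_pct: float = 5.0) -> bool:
    """True if the TAT Index is below the target (5% as suggested above)."""
    return index_pct < target_pct
```

The same function applies to the other TATs mentioned (test order to report collection, discharge decision to bed vacated) by passing those timestamp pairs with their own benchmarks.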

… and fairly …

I remember a hospital CEO who was once concerned about whether any of the eleven consultants in the hospital were at any time prescribing investigations and/or medicines not warranted by the observed symptoms and medical condition of the patient. A periodic audit comparing all prescriptions with a defined set of rules (lines of treatment) for the corresponding symptoms will give a fair idea of deviations, if any. To keep this under control, a CEO has to ensure that the Unfair Treatment Index (% of possible deviations from an appropriate line of treatment) is always kept below the acceptable tolerance benchmark.
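The periodic audit described above amounts to checking each prescription against a rule set. A minimal sketch, assuming (hypothetically) that the rules map a diagnosis to the investigations and medicines considered warranted for it, and that a prescription counts as a deviation if it contains anything outside that set. `RULES` and its entries are illustrative placeholders, not clinical guidance.

```python
# Hypothetical rule set: diagnosis -> items considered warranted for it.
# Illustrative placeholders only, not a clinical protocol.
RULES: dict[str, set[str]] = {
    "gout": {"uric acid test", "allopurinol", "colchicine"},
    "viral fever": {"cbc", "paracetamol"},
}


def deviations(diagnosis: str, items: set[str]) -> set[str]:
    """Prescribed items the rules do not list for this diagnosis.
    An unknown diagnosis flags every item for manual review."""
    return items - RULES.get(diagnosis, set())


def unfair_treatment_index(prescriptions: list[tuple[str, set[str]]]) -> float:
    """% of audited prescriptions with at least one deviation."""
    if not prescriptions:
        return 0.0
    flagged = sum(1 for dx, items in prescriptions if deviations(dx, items))
    return 100.0 * flagged / len(prescriptions)
```

A flagged prescription is only a *possible* deviation, matching the wording of the index; each one still needs a clinician's review.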

… and thus earning patients’ trust!

A hospital may expect that it has earned a patient’s trust by providing treatment that is effective, timely and fair, but it can know that for sure only by arriving at the Patient Satisfaction (P-SAT) Index. P-SAT can be derived by analyzing feedback received from patients, the results of internal surveys, and comments (adverse or commending) on social media. A prudent CEO always relies on the P-SAT Index to gauge accurately the extent of the hospital’s success and reputation.

We have now seen that patients’ trust can be earned by providing effective, timely and fair treatment. However, none of that is possible unless the hospital itself is run efficiently and profitably.

How does the CEO keep track of whether the hospital is being run efficiently?

Managing the hospital operations efficiently

To meet and exceed patients’ expectations, it is imperative that the hospital’s operations, including administrative and clinical processes, are efficient and stable. Primarily, this means that all hospital resources are used optimally and are available when needed. The TAT Index mentioned above is one such KPI; the following KPIs also provide an indication of a hospital’s operational efficiency.

Are the resources and infrastructure used optimally?

Hospital resources and infrastructure, if not used optimally, lead to lost opportunities, frittering away of resources and, most importantly, increased operating costs. The Management has to ensure that the various Wards, Operation Theaters, Labs and equipment, and even the service providers (human resources), are available to serve patients when needed. Of these various parameters, tracking bed utilization (% of hospital beds occupied at any given time) is considered very critical for any large hospital, as it has a direct impact on the hospital’s efficiency. A consistently low bed utilization could mean, among other things, either faulty planning (resulting in over-investment) or a low P-SAT. On the other hand, a consistently high bed utilization could put severe strain on resources and may result in a declining quality of service.

Thus it is imperative that the hospital CEO constantly monitor the Bed Utilization Index.
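Measured over a period rather than at a single instant, bed utilization is commonly computed as occupied bed-days divided by available bed-days. A minimal sketch, assuming a daily census of occupied beds and a fixed bed count. The 60% and 90% bands are hypothetical thresholds standing in for whatever a hospital considers "consistently low" or "consistently high".

```python
def bed_utilization(daily_occupied: list[int], beds: int) -> float:
    """% of available bed-days occupied over the period, from a daily census
    (occupied beds per day) and a fixed number of beds."""
    if beds <= 0 or not daily_occupied:
        raise ValueError("need a positive bed count and at least one day of census")
    available_bed_days = beds * len(daily_occupied)
    return 100.0 * sum(daily_occupied) / available_bed_days


def utilization_band(pct: float, low: float = 60.0, high: float = 90.0) -> str:
    """Classify against hypothetical thresholds: persistently "low" may point to
    over-investment or low P-SAT, persistently "high" to strain on resources."""
    if pct < low:
        return "low"
    if pct > high:
        return "high"
    return "normal"
```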

Are the patients kept in hospital for a period that is necessary and sufficient?

One of the most critical KPIs for a hospital is the Average Length of Stay (ALOS) of inpatients for specific types of ailments or procedures. The hospital could compare these averages with either industry benchmarks or internally set benchmarks. For example, assume that for a specific surgical procedure (including pre-operative and post-operative in-hospital care) your ALOS is 6 days. If elsewhere in the industry the ALOS for the same procedure is 7 days, either your administrative and/or clinical processes are more efficient than others’, or you may be missing out on some necessary hospital-care (a point not in your favor). On the other hand, if the ALOS elsewhere is 5 days, either you are providing some additional necessary services that others do not offer (a point in your favor), or your treatment is more often less efficient (your processes take extra time and/or resources for the same procedure).

Either way the CEO should keep a close watch on ALOS to optimize the services provided under the various procedures offered by the hospital.
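Using the example above (6 days here against 7 or 5 days elsewhere), here is a minimal sketch of computing ALOS per procedure from (procedure, days) records and reading it against a benchmark. The record format and the procedure name used in testing are hypothetical, and the strings returned simply restate the two possibilities described above rather than deciding between them.

```python
from collections import defaultdict


def alos_by_procedure(stays: list[tuple[str, int]]) -> dict[str, float]:
    """Average Length of Stay per procedure from (procedure, days) pairs."""
    totals: dict[str, int] = defaultdict(int)
    counts: dict[str, int] = defaultdict(int)
    for procedure, days in stays:
        totals[procedure] += days
        counts[procedure] += 1
    return {p: totals[p] / counts[p] for p in totals}


def compare_to_benchmark(alos: float, benchmark: float) -> str:
    """Restate the two readings given in the text for each direction."""
    if alos < benchmark:
        return "shorter: more efficient processes, or necessary care being missed"
    if alos > benchmark:
        return "longer: additional necessary services, or less efficient treatment"
    return "in line with benchmark"
```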

However, even an efficiently run hospital that has earned its patients’ trust may fail if it is financially weak.

Monitoring the financial health of the hospital

For a hospital to ensure efficiency in its operations, it is imperative that its finances are stable and profitable. Without that, the hospital will not be able to sustain efficient operations for long, and it is the hospital CEO’s prime responsibility to ensure that this does not happen. The hospital CEO can rely on the following KPIs to keep a check on the financial health of the hospital.

Is the hospital earning enough on each patient-visit?

Whether you are an individual or an establishment, the universal fact remains that you cannot consistently spend more than you earn if you are to remain financially sustainable in the long run.

What is critical for the hospital Management is to know what the hospital earns, on average, on each visit a patient makes to it for treatment, that is, the Average Revenue per Patient-Visit (ARPV). Once ARPV for a period is known and compared with the Average Cost per Patient-Visit (ACPV) for that period, the hospital CEO knows whether the hospital’s operations at current levels are sustainable or not.

Trends in ARPV and ACPV over time give the CEO sufficient insight to arrive at fair pricing of services and to take steps towards optimal utilization of resources.
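A minimal sketch of the ARPV/ACPV comparison and its trend, assuming revenue, cost and patient-visit counts are available per period. Treating "sustainable" as simply ARPV being at least ACPV is a simplifying assumption, and the function names are hypothetical.

```python
def per_visit(amount: float, visits: int) -> float:
    """Average amount per patient-visit for a period."""
    if visits <= 0:
        raise ValueError("visits must be positive")
    return amount / visits


def arpv_acpv(revenue: float, cost: float, visits: int) -> tuple[float, float, bool]:
    """Return (ARPV, ACPV, sustainable) for one period; 'sustainable' is taken
    here to mean simply that ARPV covers ACPV (an assumption)."""
    arpv = per_visit(revenue, visits)
    acpv = per_visit(cost, visits)
    return arpv, acpv, arpv >= acpv


def margin_trend(periods: list[tuple[float, float, int]]) -> list[float]:
    """Per-visit margin (ARPV - ACPV) for each (revenue, cost, visits) period."""
    return [per_visit(r, v) - per_visit(c, v) for r, c, v in periods]
```

A margin that turns negative across periods, as in a cost rise that outpaces revenue, is the kind of trend that would prompt a pricing or resource review.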

However, a strong ARPV or a manageable ACPV alone will not be sufficient for financial stability unless cash management is also strong.

Are the insurance claims being settled in time by the insurance companies?

The CEO of a 100-bed hospital once complained that although he knew the hospital had had a strong revenue stream during that period, he was finding it difficult to pay on time even for relatively small purchases of materials and services. Why was that? A quick look at the hospital accounts revealed that (as is typical of medium-to-large hospitals) almost 75% of the hospital’s revenue came from insured patients treated under cashless schemes. A substantial portion of that money was found to be blocked in overdue claims submitted to the insurance companies, which remained outstanding for various reasons. The cash-flow was thus heavily dependent on the timely settlement of insurance claims.

Any prudent CEO keeps a tight watch on the Days Claims Outstanding (DCO) with the insurance companies, closely monitoring the TPAs (Third-Party Administrators) to ensure that claims are settled by the insurance companies as per the agreed contractual terms. Timely settlement of insurance claims results in improved and predictable cash-flows and strengthens financial stability.
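No formula for DCO is spelled out here, so the sketch below borrows the usual days-in-receivables construction as an assumption: outstanding claim value divided by the average value of claims billed per day. Computing it per TPA and comparing it with a contractual settlement term (a hypothetical value supplied by the caller) flags where follow-up is needed. The function names and the `book` layout are illustrative.

```python
def days_claims_outstanding(outstanding: float, billed_in_period: float,
                            days_in_period: int) -> float:
    """DCO (assumed formula) = outstanding claims / average claims billed per day."""
    if billed_in_period <= 0 or days_in_period <= 0:
        raise ValueError("billed_in_period and days_in_period must be positive")
    return outstanding / (billed_in_period / days_in_period)


def overdue_tpas(book: dict[str, tuple[float, float]], days_in_period: int,
                 contractual_days: float) -> list[str]:
    """TPAs whose DCO exceeds the agreed settlement term.
    `book` maps TPA name -> (outstanding amount, amount billed in period)."""
    return sorted(
        tpa for tpa, (outstanding, billed) in book.items()
        if days_claims_outstanding(outstanding, billed, days_in_period) > contractual_days
    )
```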

A hospital CEO may track the KPIs mentioned above and ensure that the hospital is earning patients’ trust and is both operationally efficient and financially stable. But the litmus test of any hospital’s reputation and success is how its performance compares with that of its peers: other similar hospitals in the same geography or with the same specialization.

Where does the hospital stand when compared with its peers?

Several independent agencies periodically rank participating hospitals on various performance factors. The rankings could be by geography, by type of hospital, or by specialty.

For a CEO, it is imperative that whichever ranking matters most to the hospital is thoroughly analyzed, and that a proper strategy to improve or maintain that ranking is put in place.

In conclusion

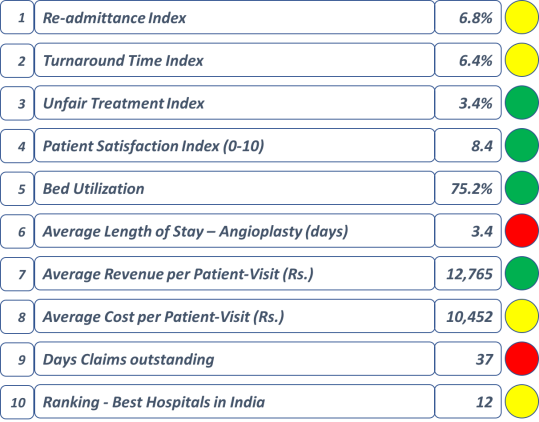

How does the CEO keep track of the above-mentioned top KPIs? The CEO’s dashboard could display the current status of the KPIs, available at any time at the click of a button (literally putting those on fingertips). A typical dashboard containing the critical KPIs could look like as shown below:

(The numbers and the traffic-light shown against each KPI in the dashboard are for illustration purpose only and do not represent any industry benchmark or desired value)

The dashboard above contains the typical KPIs critical for gauging any hospital’s performance on various operational and financial parameters. However, depending on what is critical for a particular hospital, more relevant KPIs could replace those less relevant to it.

By design, I have not included any KPIs or insights produced by clinical analytics, as those will be specialized and specific to each individual hospital.
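The traffic lights on such a dashboard boil down to comparing each KPI with limits. A minimal sketch, assuming hypothetical green/amber limits per KPI and a flag for whether higher or lower is better. Like the dashboard figures, any limits passed in are illustrative, not benchmarks, and `Kpi`, `light` and `dashboard` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    value: float
    green: float  # hypothetical limit up to which the KPI is "on target"
    amber: float  # hypothetical limit up to which the KPI needs "watching"
    higher_is_better: bool = False


def light(k: Kpi) -> str:
    """Map a KPI value to a traffic-light colour against its limits."""
    # Negate everything when higher is better, so one comparison rule serves both cases
    sign = -1.0 if k.higher_is_better else 1.0
    value, green, amber = sign * k.value, sign * k.green, sign * k.amber
    if value <= green:
        return "green"
    if value <= amber:
        return "amber"
    return "red"


def dashboard(kpis: list[Kpi]) -> dict[str, str]:
    """KPI name -> colour, i.e. the status a CEO would see at a glance."""
    return {k.name: light(k) for k in kpis}
```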

My suggestion is: let CEOs use their fingertips only for recalling the critical tricks of their trade and expertise, and let an analytics system recall the KPIs for them whenever needed!

[Glossary:

ACPV – Average Cost per Patient-Visit; ALOS – Average Length of Stay; ARPV – Average Revenue per Patient-Visit; DCO – Days Claims Outstanding; KPI – Key Performance Indicator; P-SAT – Patient Satisfaction; TAT – Turn-around Time]

In the last post, “ROI on analytics – is it always easily measurable? Part I”, I explained how the ROI on an analytics implementation could be estimated, measured and determined.

My perspective on ROI measurement

To recap from that post, there are primarily two approaches to measuring the ROI on analytics, depending upon the objective for which the analytic solution has been deployed.

Either you

- Measure the ROI comparing the (projected/achieved) gains with the deployment cost; or

- Measure the ROI comparing the losses averted/risks mitigated with the deployment cost.

The methods I explained in the last post were primarily for scenarios where the BI/BA solution has been implemented to gain business benefits.

In today’s post I will discuss the second approach, which deals with measuring ROI when analytics is used to prevent losses or mitigate risks.

Measuring ROI when anticipating loss prevention/risk mitigation

When analytics is used for risk mitigation or loss prevention, measuring ROI generally becomes extremely complex.

That is primarily for two reasons.

Firstly, when we talk of loss prevention, it stands to reason that the loss is not certain and can be avoided or reduced by mitigation. The loss event itself is thus uncertain: it depends on the probability of a vulnerability being exploited and on the extent of the impact of the resulting threat. This makes it uncertain to what degree an event could be averted, or a loss reduced, by introducing mitigating measures.

Secondly (as later examples will demonstrate), it can be very complex to put a monetary value on a loss that might have occurred had the system not been deployed, but which was averted or reduced because it was.

For example, how could you accurately estimate, in monetary terms, losses that ‘could have’ happened but ‘did not’, such as:

- identity theft

- loss of information

- hacking of a site

- compromise of customer data

- human lives impacted

- impact on reputation.

The success of an analytics system deployed to prevent losses depends on (probabilistic) events not happening, or happening with lesser impact. To measure the monetary impact of what has not happened, we would have to delve into complex algorithms and modelling, venturing into the exotic but very unreal world of probabilities and predictions.
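To illustrate why, one common way to put a number on an averted loss is expected value: the probability of the event times its monetary impact, before and after mitigation. The sketch below is an illustration, not a recommended method. Every probability, impact and cost in it is a hypothetical input, and those inputs are precisely what is hard to know.

```python
def expected_loss(probability: float, impact: float) -> float:
    """Expected loss = probability of the event x its monetary impact."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be in [0, 1]")
    return probability * impact


def risk_roi(p_before: float, p_after: float, impact_before: float,
             impact_after: float, cost: float) -> float:
    """ROI = (expected loss averted - cost) / cost, all inputs assumed."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    averted = (expected_loss(p_before, impact_before)
               - expected_loss(p_after, impact_after))
    return (averted - cost) / cost
```

With an assumed 25% chance of an 8,000,000 loss cut to 12.5% by a system costing 500,000, `risk_roi(0.25, 0.125, 8_000_000, 8_000_000, 500_000)` gives 1.0, a 100% ROI. Assume a prior of 12.5% instead and the same system, with the same uneventful year, shows -1.0. The result is only as good as the guessed probabilities.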

The difficulty should become evident from the following examples taken from different industries.

A) Fraud detection and reducing NPAs

Let us take four scenarios from Financial Services and attempt to determine the ROI on the analytics solutions deployed.

Credit risk rating while approving credit

A bank can use analytics to prevent or reduce fraud by weeding out, at the credit approval stage itself, those credit applicants who are likely to default. By successfully implementing such a solution, the bank may be able to reduce credit defaults and thus considerably improve the quality of its credit portfolio.

But estimating what the defaults would have been had the risk not been mitigated (that is, had those applicants been given credit) is very difficult, if not impossible. Once credit has been declined and the applicant treated as non-creditworthy, the quantum of credit that could have been provided is pure conjecture.

Thus, we can measure the impact of such a system more in qualitative than in monetary terms, as shown below (Figure 1).

Figure 1 – Analytics for risk scoring

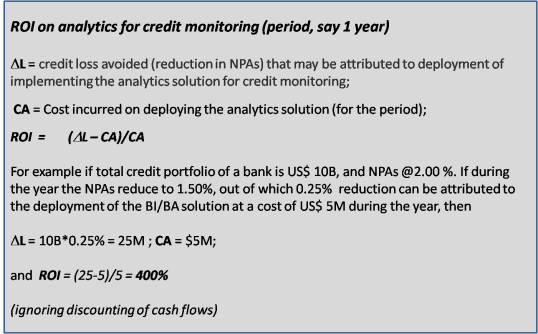

Reducing NPAs (credit monitoring)

This is one scenario where the impact of analytics can be measured relatively accurately.

We can use BI/BA very effectively in credit monitoring to detect behavior and/or transaction patterns that could indicate potential default by customers, and to act upon those insights to salvage the account. In such a scenario, the resulting reduction in NPAs gives a fair measure of the impact of the analytics system, as shown below (Figure 2).

Here again the challenge is to estimate what portion of the reduction can be attributed to the deployment of the BI/BA solution.

Figure 2 – Analytics for reduction of NPAs

Value at Risk

Currently, BI/BA is extensively used within FIs to measure the Value at Risk (VaR) of financial assets accurately. With deeper statistical analytics and predictive modeling, an institution may be able to measure VaR/LGD/EAD more reliably, and subsequently determine its Tier I/Tier II capital requirements with fair accuracy.

However, we have to realize that the ability to predict VaR accurately (using analytics) does not by itself change the inherent (realizable) value of the underlying assets. Thus it may not materially affect the extent of loss due to NPAs.

With deeper predictive modeling, the bank may be able to determine whether to provide less or more capital (as per Basel II/III norms), and thus may save (or spend extra) on the cost of raising capital funds. This may lead to better cash/funds management, but determining any benefits in monetary terms may be complex.

Compliance

I remember that when I joined Bank of India some 38 years ago, statistical algorithms were already available that could be used to predict whether a loan would go bad. Over the years, the predictive models and simulations for assessing credit risk and market risk in financial institutions have matured considerably and are now used extensively.

However, the emphasis on operational risk has gained impetus only during the last 15–20 years. That has been necessitated by the following factors:

- Collapse of several leading FIs due to systemic failures

- Focus on compliance in FIs due to various regulatory provisions being put in place, such as the SOX Act, Basel II/III, the Patriot Act, etc.

Deployment of deep statistical analytics coupled with predictive modeling enables FIs to have a better regime of risk mitigation and monitoring of systemic risks, leading to the desired level of compliance.

These BI/BA applications enable early detection of deviant behaviors, non-compliance, and correlations between probabilistic events. With these systems in place, we could also predict with greater accuracy the probability and impact of events identified as systemic threats.

However, it is difficult for us to measure the impact of such systems in monetary terms. We may be able to gauge the success of BI/BA systems better through qualitative measures such as the extent of increase in compliance, the number of deviant patterns detected, the extent of reduction in risk exposures, and successful retention of reputation.

B) Preventing cyber attacks/thefts

Assume an anti-virus company decides to enhance its service effectiveness by deploying deep analytics to detect patterns likely to lead to exploitation of vulnerabilities in the system, and to take preventive action in real time against cyber attacks such as attempted identity theft, compromise of data integrity, etc.

From the company’s point of view, deploying analytics leads to a better value proposition for its customers, and thus to increased sales, possibly at a premium price. The company could estimate the incremental sales attributable to the analytics deployment and arrive at an ROI.

However, from the user’s point of view, though paying an additional premium (if any) has resulted in better prevention of losses (disk crashes, identity theft, site hacking, etc.) that might have happened with some other product, it is very difficult, if not impossible, to measure the loss thus averted.

Can the user really estimate how many times the disk or website was saved from crashing, or what the impact on the organization’s reputation would have been if some customer data had been compromised? Probably not very easily!

C) Behavioral profiling

Similarly, take the case of a busy airport where analytic systems may be used to detect behavioral patterns that would alert the authorities to the likelihood of smuggling or, for that matter, any terrorist activity.

Assume that over a period of one year, thanks to the successful application of such systems, there are no untoward incidents at the airport (as they would all have been detected and interrupted before they occurred). Several lives might have been saved, losses to buildings, planes and other assets avoided, and any detrimental impact on reputation averted.

Now, how could we measure these returns in monetary terms? Putting monetary values on these successes is very complex, and may not even be feasible.

Conclusion

I could go on providing similar examples from other industries, but the few examples above, from diverse industries, should suffice to bring out clearly the difficulties in measuring the ROI on analytics deployed for risk mitigation.

My submission is that although the benefits in such cases may be readily evident, putting a monetary value on them may not always be easy.

To summarize my observations, let me borrow the phrases from the popular “MasterCard” ads –

[Glossary:

BA – Business Analytics; BI – Business Intelligence; EAD – Exposure at Default; FI – Financial Institution; LGD – Loss Given Default; NPA – Non-Performing Assets; ROI – Return on Investment; SOX – Sarbanes-Oxley; VaR – Value at Risk]

In my earlier post – “Delaying investment in analytics may be injurious for the organization’s health” – I argued that once due diligence has been carried out and a decision taken to deploy an analytic solution, any further delay in implementation should be strictly avoided. I reasoned that, as the expected ROI was generally a high multiple of the investment, any delay in implementation would mean that much benefit forgone.

However, the questions remain: would those benefits actually have accrued, and is it possible to measure easily the returns that analytics promises?

It is generally believed that measuring ROI is more complex for an analytic solution than for, say, an ERP implementation. That is because the former is not really a transaction processing system, and its success largely depends on whether stakeholders/users actually take advantage of the insights the analytic solution provides. You may have a great BI solution in place, but it may still fail if the management ignores the trends/insights the application provides.

In such a scenario, how does one estimate what benefits are likely to accrue if an analytic solution is deployed? And, how does one subsequently measure the ROI on analytics once the solution is deployed?

My perspective on ROI measurement

There are primarily two approaches to measuring the ROI on analytics, depending upon the objective for which the analytic solution has been deployed.

Either you

- Measure the ROI comparing the (projected/achieved) gains with the deployment cost; or

- Measure the ROI comparing the losses averted/risks mitigated with the deployment cost.

In today’s post I will attempt to discuss the first approach, and will take up the second approach in the next post.

Measuring ROI when anticipating gains in business with the analytics implementation

Simplified approach

If you deploy a BA/BI solution with an objective to seeking gain in business/revenue/profitability, the ROI calculation is ‘seemingly’ straight forward and ‘apparently’ not very difficult to measure and monitor.

Typically, you determine the incremental revenue that can be attributed solely to deploying the analytic solution, and subtract from it the incremental cost of sales required to achieve that revenue. You then compare the resulting incremental profit with the cost of deploying the analytic solution to arrive at the ROI (see Box 1).

Box 1 – Simplified ROI calculation
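As a rough illustration, the simplified calculation can be written as a small Python function. This is a sketch rather than the post's own formula: it assumes the common convention ROI = (incremental profit - deployment cost) / deployment cost, and the figures are hypothetical.

```python
def simple_roi(incremental_revenue: float,
               incremental_cost_of_sales: float,
               deployment_cost: float) -> float:
    """Simplified (undiscounted) ROI, returned as a fraction (1.0 == 100%).

    Assumed convention: ROI = (incremental profit - deployment cost) / deployment cost,
    where incremental profit is the revenue attributable solely to the analytic
    solution minus the extra cost of sales needed to earn it.
    """
    if deployment_cost <= 0:
        raise ValueError("deployment_cost must be positive")
    incremental_profit = incremental_revenue - incremental_cost_of_sales
    return (incremental_profit - deployment_cost) / deployment_cost


# Hypothetical figures, for illustration only.
roi = simple_roi(incremental_revenue=500_000,
                 incremental_cost_of_sales=300_000,
                 deployment_cost=100_000)
print(f"Simplified ROI: {roi:.0%}")  # prints "Simplified ROI: 100%"
```

Some write-ups instead report incremental profit divided by deployment cost; only the final subtraction changes.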

ROI calculation taking discounted cash-flows into account

However, this simplified ROI calculation may be substantially off the mark if the times at which revenue inflows and cost outflows occur (or are likely to occur) are quite mismatched, which they generally are. To arrive at a more accurate ROI, the Net Present Value (NPV) of all cash-flows has to be determined as on the date on which the ROI is being base-lined, and the ROI formula then applied (see Box 2).

Box 2 – ROI calculation taking discounted cash-flows into account
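A minimal sketch of the discounted version, under stated assumptions: cash flows are bucketed into equal periods (years here), each is discounted at a single hypothetical rate of 10% per period, and ROI is computed on present values using the same (gain - cost) / cost convention. Function names and figures are illustrative only.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Present value, at period 0, of cash_flows[t] received at the end of period t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def discounted_roi(rate: float,
                   profit_flows: list[float],
                   cost_flows: list[float]) -> float:
    """ROI on present values: (PV of incremental profit - PV of cost) / PV of cost."""
    pv_cost = npv(rate, cost_flows)
    if pv_cost <= 0:
        raise ValueError("present value of costs must be positive")
    return (npv(rate, profit_flows) - pv_cost) / pv_cost


# Hypothetical project: cost paid up front (year 0), profit arrives in years 1 and 2.
costs = [100_000, 0, 0]
profits = [0, 110_000, 121_000]

undiscounted = (sum(profits) - sum(costs)) / sum(costs)
discounted = discounted_roi(0.10, profits, costs)
print(f"Undiscounted ROI: {undiscounted:.0%}")      # prints "Undiscounted ROI: 131%"
print(f"Discounted ROI at 10%: {discounted:.0%}")   # prints "Discounted ROI at 10%: 100%"
```

The same nominal figures give 131% undiscounted but only about 100% once the later arrival of the profit is taken into account, which is the mismatch the paragraph above warns about.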

Thus, by taking the discounted cash-flows into account we might be able to get a more accurate measure of the ROI.

However, both these methods work well primarily while calculating a projected ROI where revenue/costs are still to accrue, but complexity creeps in as soon as you try to determine the ROI of an already implemented BI solution.

How does complexity creep in?

When we wish to measure the success of a BA/BI solution that has already been implemented, the following complexities are generally encountered:

- Determining whether a sale can be attributed solely to the BA/BI solution deployment (i.e., whether the sale would not have happened had the solution not been implemented) may not always be simple and straightforward;

- Likewise, it might be difficult to determine whether a cost was incurred because of the deployment of the BI/BA solution or not; and

- More importantly, NPVs are affected by changes in credit periods (given to customers or received from suppliers), which can move the ROI either way (positively or negatively). Segregating the impact of AR/AP periods on the ROI might become very complex.

An example of complexity

Imagine that your BI solution has been successfully deployed at your organization, and has resulted in substantial gains (as projected) in revenue from a customer.

Consider a scenario where, due to increased business, the customer negotiates a higher discount (say from 10% to 12%) and better payment terms (say from 30 days to 40 days). The revised arrangement will surely impact the ROI, as margins and the NPV of cash-flows will fall below what was expected. Now, will your measurement system be granular enough to identify and segregate which portions of revenue and costs were attributable to the BI deployment and which were not?
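One way to segregate at least the customer-side effects is to compute the present value of the billing under each set of terms and split the change into a discount effect and a credit-period effect. The sketch assumes a hypothetical billing amount of 1,000,000, a hypothetical 12% annual cost of capital compounded annually, and a single receipt at the end of the credit period; none of these figures come from the post.

```python
def present_value(amount: float, annual_rate: float, days: int) -> float:
    """Value today of `amount` received after `days`, discounted at `annual_rate` (annual compounding)."""
    return amount / (1 + annual_rate) ** (days / 365)


gross_billing = 1_000_000  # hypothetical billing to the customer at list price
cost_rate = 0.12           # hypothetical annual cost of capital

pv_before = present_value(gross_billing * (1 - 0.10), cost_rate, 30)  # 10% discount, 30 days
pv_mid = present_value(gross_billing * (1 - 0.12), cost_rate, 30)     # 12% discount, still 30 days
pv_after = present_value(gross_billing * (1 - 0.12), cost_rate, 40)   # 12% discount, 40 days

discount_effect = pv_mid - pv_before  # loss from the higher discount alone
credit_effect = pv_after - pv_mid     # further loss from the longer credit period
total_effect = pv_after - pv_before

print(f"Discount effect:      {discount_effect:,.0f}")
print(f"Credit-period effect: {credit_effect:,.0f}")
print(f"Total change in PV:   {total_effect:,.0f}")
```

The split depends on the order in which the changes are applied (discount first, then credit period, in this sketch), and deciding how much of what remains belongs to the BI deployment would still need separate attribution rules.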

In real-life scenarios, several other factors could also affect the ROI, making such calculations very complex indeed.

But those calculations can still be carried out! In my opinion, a good rules-based engine coupled with basic activity-based costing capability could integrate with the measurement system to determine the ROI of an analytic solution to a fair degree of accuracy. That is because, in spite of all the (real or perceived) complexities, the relevant data is still available in the operational systems for analysis.
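The post does not describe how such an engine would be built, so the following is only a hypothetical shape for it: ordered attribution rules assign each sale a share of credit for the BI solution, and activity-based costing prices the activities consumed by BI-attributed sales. The `Sale` fields, the rules, the attribution shares and the activity rates are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Sale:
    customer: str
    revenue: float
    lead_source: str  # hypothetical field: channel the lead came from
    bi_flagged: bool  # hypothetical field: lead was surfaced by the BI solution


# Each rule returns the share (0..1) of a sale attributable to the BI solution,
# or None if the rule does not apply. Rules are checked in order; first match wins.
Rule = Callable[[Sale], Optional[float]]

RULES: list[Rule] = [
    lambda s: 1.0 if s.bi_flagged and s.lead_source == "bi_campaign" else None,
    lambda s: 0.5 if s.bi_flagged else None,  # BI-assisted sale: shared credit
    lambda s: 0.0,                            # default: not attributable
]

# Activity-based costing: hypothetical cost per unit of each activity driver.
ACTIVITY_RATES = {"sales_call": 50.0, "order_processing": 20.0}


def attributed_revenue(sale: Sale, rules: list[Rule] = RULES) -> float:
    """Revenue credited to the BI solution by the first rule that applies."""
    for rule in rules:
        share = rule(sale)
        if share is not None:
            return sale.revenue * share
    return 0.0


def activity_cost(activity_counts: dict[str, int]) -> float:
    """Cost of the activities consumed, priced at the activity-driver rates."""
    return sum(ACTIVITY_RATES[activity] * count
               for activity, count in activity_counts.items())


sales = [
    Sale("A", 10_000, "bi_campaign", True),
    Sale("B", 8_000, "referral", True),
    Sale("C", 5_000, "referral", False),
]
bi_revenue = sum(attributed_revenue(s) for s in sales)               # 10,000 + 4,000 + 0
bi_cost = activity_cost({"sales_call": 20, "order_processing": 10})  # activities traced to BI-led sales
# prints: Attributed revenue: 14,000; activity cost: 1,200; incremental profit: 12,800
print(f"Attributed revenue: {bi_revenue:,.0f}; activity cost: {bi_cost:,.0f}; "
      f"incremental profit: {bi_revenue - bi_cost:,.0f}")
```

Keeping the rules as an ordered list makes the attribution policy explicit and auditable, which matters when the resulting ROI is questioned later.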

ROI in case where the analytic solution is primarily deployed to avert losses/mitigate risks

However, if the analytic solution is deployed more as insurance, or as a risk mitigation initiative for preventing risks/losses, it might become difficult to measure its ROI accurately.

More on that in my next post.

[Glossary:

AP – Accounts Payable; AR – Accounts Receivable; BA – Business Analytics; BI – Business Intelligence; ERP – Enterprise Resource Planning; NPV – Net Present Value; ROI – Return on Investment]

In my last two posts I narrated some of my past experiences with analytics. In this post I take a break from the past and delve into the present.

I intend to keep flitting between past and present in my future posts too.

How negotiating a better deal may turn out to be a losing proposition

Recently, a digital marketing company was looking for a business analytics solution that would enable them to predict, with reasonable accuracy, the probability of an inquiry on their portal converting into a potential win. The management believed that with the above solution in place, the company would achieve several direct and indirect benefits, the major ones being:

1) Reduction in its call center strength by 10% (on account of early weeding out of non-serious inquiries)

2) Improvement in its Inquiry-to-Business conversion ratio from 10:1 to 4:1 (on account of being able to focus more on potentially winnable inquiries)

The above benefits, if achieved, were expected to provide an estimated return on investment (ROI) of 720% on the cost of this solution over a period of 36 months (fairly reasonable for any BI/BA initiative).

The company had already identified and selected a solution provider, frozen the system requirements, and was in the process of price negotiation. As it happened, the haggling over price went on for over two months, and ultimately the company was able to extract a 20% discount on what the vendor had earlier termed its lowest quote.

Now in this whole process, did the company really gain? Apparently yes. But if you engage in a bit deeper analysis, probably not!

By delaying the accrual of direct and indirect benefits by two months, the resultant loss to the company was actually 20% of the investment cost as explained below.

a) Benefits forgone (that would have been available if the analytics system had been in place) for 2 months – 40% of the investment cost (720% * 2/36)

b) Savings arising out of price negotiation – 20%

Net loss = (a) – (b) = 40% – 20% = 20% of the investment cost.
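The arithmetic above can be captured in a couple of small functions. This sketch assumes, as the calculation does, that the 720% return accrues evenly over the 36 months and that the negotiated discount can be expressed as a fraction of the investment cost; the function names are illustrative.

```python
def net_cost_of_delay(roi_over_horizon: float, horizon_months: int,
                      delay_months: int, negotiated_discount: float) -> float:
    """Net loss from a delay, as a fraction of the investment cost.

    Assumes the expected return accrues evenly over the horizon, so each month
    of delay forgoes roi_over_horizon / horizon_months of the investment cost.
    """
    benefits_forgone = roi_over_horizon * delay_months / horizon_months
    return benefits_forgone - negotiated_discount


def break_even_discount(roi_over_horizon: float, horizon_months: int,
                        delay_months: int) -> float:
    """Smallest discount (fraction of investment cost) that offsets the delay."""
    return roi_over_horizon * delay_months / horizon_months


loss = net_cost_of_delay(7.20, 36, 2, 0.20)
print(f"Net loss: {loss:.0%} of the investment cost")  # prints "Net loss: 20% of the investment cost"
```

With these inputs, `break_even_discount(7.20, 36, 2)` returns 0.4: the negotiation would have had to yield a 40% discount just to break even on a two-month delay.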

The above is an example of a scenario where an undue delay in price negotiation led to reduced benefits. However, a delay in implementing an analytics solution for reasons other than price negotiation may also result in potential revenue loss, as is evident from the next example.

An example from the IT services industry

An IT services company with over 1,000 billable resources intended to use a Business Intelligence/Business Analytics (BI/BA) solution to significantly improve its resource deployment process. It expected that, with more accurate prediction of resource demand from its sales teams, the company would be able to optimize and effectively manage its sourcing supply chain, resulting in at least a 1% improvement in its resource utilization.

Even after the company had selected and engaged a competent firm to deploy the desired solution, the implementation got delayed by over 3 months, for various reasons including lethargy on the part of internal stakeholders. What the company did not realize was that, in effect, it had forgone the benefit (revenue) that would have accrued from having at least 10 additional resources (1% of billable resources) billed each month for those 3 months. Even at a conservative billing rate of USD 16 per hour, that turns out to be more than USD 75K (with practically no additional direct cost, as the resource costs were being incurred irrespective of whether a resource was billed or not).
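The estimate can be checked with a short calculation. The only input not stated in the post is the number of billable hours per resource per month, assumed here to be 160; the function name is hypothetical.

```python
def forgone_billing(billable_resources: int, utilization_gain: float,
                    hours_per_month: float, rate_per_hour: float,
                    delay_months: int) -> float:
    """Revenue forgone when a utilization improvement is delayed."""
    extra_billed_resources = billable_resources * utilization_gain
    return extra_billed_resources * hours_per_month * rate_per_hour * delay_months


# 1,000 resources, 1% utilization gain, USD 16/hour, 3-month delay (from the example);
# 160 billable hours per resource per month is an assumption, not from the post.
lost = forgone_billing(1_000, 0.01, 160, 16, 3)
print(f"Revenue forgone: USD {lost:,.0f}")  # prints "Revenue forgone: USD 76,800"
```

At roughly 156 or more billable hours a month the total clears USD 75K, so the post's figure holds under any typical full-time assumption.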

This effect (delays in implementation substantially reducing benefits) is evident not only in BI/BA implementations but also in process improvement initiatives, since in the latter case too the ROI is generally a large multiple of the investment.

That is clearly seen in the next example which is taken from the manufacturing industry.

ERP implementation in a manufacturing company

About 15 years back, a manufacturing company with an annual turnover of Rs. 120 crore (USD 28M in those days) intended to implement an ERP system covering its entire operations, including Finance. The purpose was to benefit from the implementation of best practices, and thus gain several direct and indirect benefits. The company expected that the streamlined operations and adoption of best practices would raise its Net Profit (at that time hovering around 10% of revenue) by at least 1% of revenue.

I was part of the vendor evaluation panel that was set up to decide on whether to go in for SAP or Oracle Financials.

Even after a few months of extensive presentations and competitive pricing by both vendors, the panel was none the wiser about which ERP to go for. Finally, after several more meetings and based on the various parameters evaluated, the panel decided on a particular vendor. Now it was the CFO’s turn to step in and take over the final price negotiations, and he expected that process to take another month.

I remember offering just one piece of advice to the panel members at that stage. I reminded them that the expected benefits from the implementation were Rs. 10 lac (USD 24K) per month (1% of the monthly revenue of Rs. 10 crore). Therefore, I reasoned, the CFO should be treated as successful in the negotiations only if he was able to extract a discount of over Rs. 10 lac.
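The benefit-versus-discount test can be sketched as follows. The sketch reads the 1% as one percentage point of revenue (consistent with 1% of the Rs. 10 crore monthly revenue), assumes the benefits accrue evenly each month, and takes the CFO's expected one-month negotiation as the delay; the function name is illustrative.

```python
CRORE = 10**7  # 1 crore = 10 million rupees
LAKH = 10**5   # 1 lakh (lac) = 100,000 rupees


def break_even_discount(monthly_benefit: int, delay_months: int) -> int:
    """Discount the negotiation must extract just to offset the benefits forgone."""
    return monthly_benefit * delay_months


annual_turnover = 120 * CRORE
monthly_revenue = annual_turnover // 12  # Rs. 10 crore per month
uplift_percentage_points = 1             # net margin from ~10% to ~11% of revenue
monthly_benefit = monthly_revenue * uplift_percentage_points // 100

needed = break_even_discount(monthly_benefit, delay_months=1)
print(f"Break-even discount: Rs. {needed / LAKH:.0f} lac")  # prints "Break-even discount: Rs. 10 lac"
```

Integer rupee arithmetic is used so that the crore/lac conversions stay exact.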

Not delaying does not mean rushing headlong into implementing analytics

The above illustrations simply bring out the need to recognize when one reaches an inflection point in negotiations, beyond which any further delay in decision-making may become counter-productive.

Having said that, I agree that all major BI/BA and process improvement initiatives are undoubtedly (very!) risky affairs. An organization cannot be rushed into taking a hasty decision. If not properly designed or scoped, these implementations might result in significant losses by way of reduced or minimal benefits. Before plunging into any such implementation, the organization has to weigh all the associated risks, and draw up appropriate plans to mitigate those risks.

My view, though, is that once an organization has completed all due diligence and decided to go ahead, the decision-makers would be prudent to stay cognizant of the impact of any further delays in implementation.

I had my first brush with what I now know as analytics way back in 1976.

I was a probationary officer with Bank of India, posted then at a small branch in a small town. As part of getting trained in all departments of the Bank, I also worked in the credit department. My job was primarily getting the documentation complete from the borrowers once their loans were sanctioned, and to file those documents appropriately in dockets.

Those were the days of excessive focus on priority sector lending under the Government’s 20-point program. Loans to owner transport operators were one of the focus areas of lending, and the branch had a dozen customers who had taken loans to purchase pre-owned trucks.

While scrutinizing the loan documents prior to an internal audit I was surprised to observe a definite pattern in the documentation provided by the dozen different loan account holders:

- Offers to sell from different, unrelated sellers appeared to have been written on the same stock of stationery (judging by the paper texture)

- Similarly, receipts for the sale amounts received by various unrelated sellers appeared to have come from the same cash-receipt booklet

- All cost valuation reports were signed by the same authorized valuer

- Unrelated borrowers had guarantors who were related to each other

- There was a strong correlation between the dates of loan disbursement and the dates of deposits of similar amounts into a particular family’s accounts at the branch.
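Today the last of these checks would be automated. A minimal sketch, with hypothetical dates, amounts, a 3-day window and a 5% amount tolerance, none of which come from the original case: it pairs each disbursement with a deposit of a similar amount made shortly afterwards.

```python
from datetime import date


def match_disbursements(disbursements, deposits, max_days=3, tolerance=0.05):
    """Pair each loan disbursement with a later deposit of a similar amount.

    disbursements, deposits: lists of (date, amount) tuples.
    A match needs the deposit within max_days after the disbursement and an
    amount within `tolerance` (as a fraction) of the disbursed amount.
    """
    matches = []
    for d_date, d_amount in disbursements:
        for p_date, p_amount in deposits:
            lag_days = (p_date - d_date).days
            if 0 <= lag_days <= max_days and abs(p_amount - d_amount) <= tolerance * d_amount:
                matches.append(((d_date, d_amount), (p_date, p_amount)))
                break  # one matching deposit per disbursement is enough
    return matches


# Hypothetical records, not the branch's actual data.
disbursements = [(date(1976, 3, 1), 40_000), (date(1976, 4, 10), 35_000), (date(1976, 5, 5), 50_000)]
family_deposits = [(date(1976, 3, 2), 39_000), (date(1976, 4, 11), 34_500), (date(1976, 6, 20), 20_000)]

hits = match_disbursements(disbursements, family_deposits)
print(f"{len(hits)} of {len(disbursements)} disbursements matched a deposit")  # prints "2 of 3 disbursements matched a deposit"
```

A high match rate across unrelated borrowers is a red flag worth escalating, not proof of collusion on its own.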

Once these facts were brought to the notice of the internal auditors, a thorough inspection of those loan accounts was ordered. It was found that the assets (old trucks) had been grossly overvalued, and that the sellers and the buyers had colluded to obtain excess funds from the bank. Ultimately, the bank had to file legal suits to recover those loans.

And what happened to me? Well, the worst thing to happen was that my next appraisal was messed up by the Manager, and the best thing to happen was that the Manager finally dropped the idea of getting his niece married to me. (Just kidding.)